Sharing a collection of tech-related things I saved up over the week to read this morning.

Still number 1 across most dimensions. They must have heard I am leaving, hence the drop to number 2 on the talent dimension 😉

https://evidentinsights.com/ai-index/

A friend who runs digital strategy for the Financial Times shared this. It may be worth extracting and presenting to clients to help drive ideas for new use cases. Loving the Design-to-Code idea.

https://digitalcontentnext.org/blog/2023/11/15/opportunities-and-risks-of-multimodal-ai-for-media/

The previous article linked to this piece on prompt injection, which is really interesting.

https://simonwillison.net/2023/Oct/14/multi-modal-prompt-injection/

As prep for the AI-900 exam I read through all the Azure ML docs. Some really great stuff is being deployed, and they have a good pipeline going with MS Research. Prompt flow is really cool and, once widely adopted, will quickly democratize GenAI from DS to SWE.

https://learn.microsoft.com/en-us/azure/machine-learning/?view=azureml-api-2

Just ask Satya

Bill Gates's thoughts on AI. Can't help but smile at the Matrix reference with Agents, call me Mr Anderson…

https://www.gatesnotes.com/AI-agents?WT.mc_id=20231109150000_AI-Agents_BG-LI_&WT.tsrc=BGLI

This is very cool for search. I can see this getting industry-wide adoption.

https://azure.microsoft.com/en-us/products/ai-services/ai-search/

Some new foundation models from Nvidia. If I were in the chatbot space I would be looking at this.

Azure is killing it at the moment.

Though AWS still got game

https://aws.amazon.com/blogs/aws/amazon-bedrock-now-provides-access-to-llama-2-chat-13b-model/

Word on the street is it’s better to focus on building apps than foundation models

GPTs = custom models for all, and according to the Bill Gates article you are looking at the future of agents right here.

https://openai.com/blog/introducing-gpts

To be fair, the weather apps are already pretty good compared to when I was a boy, when it was mostly a rock on a string. [If the rock is wet, it's raining. If the rock is swinging, the wind is blowing. If the rock casts a shadow, the sun is shining.]

Microsoft getting in on the AI chip game

Also murmurings from DeepMind about this, so I am wondering exactly what breakthroughs are bubbling under the hood right now.

https://venturebeat.com/ai/openais-six-member-board-will-decide-when-weve-attained-agi/

GenAI and the Meno Paradox. [In order for inquiry to succeed, you need to know what you are looking for so that you recognize it when you find it; but if you know what you are looking for, inquiry is unnecessary. This is "Meno's Paradox."] I draw your attention to this line in the article, which cuts across the use cases for code generation: “The reality is that the effectiveness of AI coding assistants is dependent on the degree to which the developer has a clear understanding of good coding practices, the architecture of the application, and the functionality they aim to achieve.”

https://www.linkedin.com/pulse/generative-ais-meno-paradox-philip-walsh-phd-vgiae/

Clowns to the left of me, jokers to the right….

https://the-decoder.com/gpt-4-turbos-best-new-feature-doesnt-work-very-well/?amp=1

Good week for JPM Coin

AWS climbing an LLM mountain

Don’t stick your head in the sand when it comes to text to video generation

Meta has just announced two major milestones in their generative AI research: 'Emu Video' and 'Emu Edit'.

Emu Video, a novel text-to-video generation model, utilizes the Emu image generation model to produce high-quality videos from text-only, image-only, or combined text and image inputs. This model stands out for its factorized approach, enhancing the efficiency of training video generation models and delivering superior video quality. In fact, in human evaluations, 96% of respondents preferred the output quality of Emu Video over previous models.

Emu Edit brings a new level of precision to image editing. This model allows for free-form editing solely through text instructions, a significant departure from many existing models. It's designed to follow instructions with high precision, ensuring that only specified elements of the input image are edited while leaving other areas untouched. This specificity in editing provides a more powerful and reliable tool for image manipulation.

To support the development of Emu Edit, Meta has created a dataset containing 10 million synthesized samples, including input images, instructions, and targeted outputs. This makes it the largest dataset of its kind, contributing to Emu Edit's state-of-the-art performance in both qualitative and quantitative evaluations across various image editing tasks.

While still in the realm of fundamental research, these technologies from Meta show great promise for enhancing communication, sharing, and creative expression within their family of apps. The continued progress in this area of work is something to watch closely.

Old school going old school

Hugging Face has a lot of partners

https://venturebeat.com/ai/dell-and-hugging-face-partner-to-simplify-llm-deployment/

You learn from your mistakes. If they do a follow-up called Battlestar Galactica then I am in. [Best TV show of all time after The Sopranos, in my opinion.]

All empires fall. Perhaps this is the start for Apple: Et tu, Ive?

https://kimbellard.medium.com/altman-ive-and-ai-oh-my-5e1d0db1d03b

Asking the big questions to GPT so you don’t have to, looking forward to the Meta

Tensor GPU architecture refers to the design and structure of graphics processing units (GPUs) that are specifically optimized for deep learning and neural network computations. The term "Tensor" in Tensor GPU architecture refers to the mathematical concept of tensors, which are multi-dimensional arrays commonly used in deep learning algorithms.

Overall, Tensor GPU architecture is designed to provide efficient and high-performance computing for deep learning tasks, making it a popular choice for researchers and practitioners in the field of artificial intelligence.

I was going to make a joke about a Meta PyTorch chip but it does not work

Tensor and PyTorch are related but distinct concepts in the field of deep learning.

Tensor: A tensor is a mathematical object that represents multi-dimensional arrays of numerical values. Tensors are fundamental data structures used in deep learning frameworks to store and manipulate data. Tensors can have different dimensions, such as scalars (0-dimensional tensors), vectors (1-dimensional tensors), matrices (2-dimensional tensors), or higher-dimensional tensors. Tensors can be operated on using mathematical operations like addition, multiplication, and matrix operations.
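To make the scalar / vector / matrix ladder concrete, here is a minimal sketch in plain Python that treats nested lists as tensors. The helper names `shape` and `add` are made up for this illustration and are not part of any library; a real framework stores tensors far more efficiently.

```python
def shape(t):
    """Return the shape of a nested-list 'tensor' as a tuple.

    Assumes regular nesting (every sub-list at a level has the same length).
    """
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0] if t else None
    return tuple(dims)


def add(a, b):
    """Element-wise addition of two nested-list tensors of the same shape."""
    if isinstance(a, list):
        return [add(x, y) for x, y in zip(a, b)]
    return a + b


scalar = 3.0                                               # 0 dimensions
vector = [1.0, 2.0, 3.0]                                   # 1 dimension
matrix = [[1.0, 2.0], [3.0, 4.0]]                          # 2 dimensions
cube = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]   # 3 dimensions

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    print(name, "rank", len(shape(t)), "shape", shape(t))

doubled = add(matrix, matrix)
```

The number of entries in the shape tuple is the tensor's number of dimensions, which is the only thing that separates a scalar from a vector from a matrix here.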

PyTorch: PyTorch is an open-source deep learning framework that provides a high-level interface for building and training neural networks. It grew out of the earlier Lua-based Torch library and is widely used for research and development in the field of artificial intelligence. PyTorch provides a dynamic computational graph, which allows for flexible and efficient execution of neural networks. It also offers automatic differentiation, which simplifies the process of computing gradients for training neural networks.

In summary, tensors are the fundamental data structures used in deep learning, while PyTorch is a deep learning framework that provides tools and functionalities for working with tensors and building neural networks. PyTorch makes it easier to define, train, and deploy deep learning models by providing a high-level API and a range of utilities for tasks such as data loading, model optimization, and visualization.
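The automatic differentiation mentioned above is easier to picture with a toy version. The sketch below is plain Python, not PyTorch and not how PyTorch is implemented: the `Value` class is a hypothetical stand-in that records each operation as it runs (the "dynamic graph") and then walks it backwards with the chain rule. In PyTorch itself the rough equivalent is creating tensors with `requires_grad=True` and calling `.backward()` on the result.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._grad_fns = grad_fns  # one local-derivative function per parent

    def __add__(self, other):
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __mul__(self, other):
        # d(a * b)/da = b and d(a * b)/db = a
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Order the recorded graph so each node comes after its inputs,
        # then apply the chain rule from the output back to the inputs.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)

        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            for parent, fn in zip(v._parents, v._grad_fns):
                parent.grad += fn(v.grad)


x = Value(2.0)
w = Value(3.0)
b = Value(1.0)
y = w * x + b   # the graph is built while this line executes
y.backward()
print(y.data, x.grad, w.grad, b.grad)
```

For `y = w * x + b` the gradients come out as `dy/dx = w`, `dy/dw = x` and `dy/db = 1`, which is what a training loop would use to nudge `w` and `b`.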

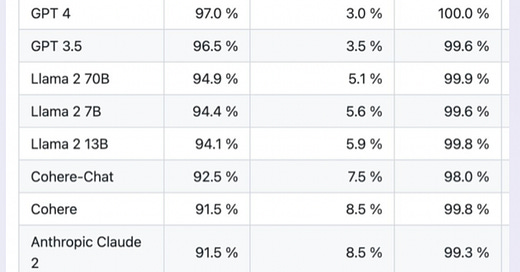

Hallucination rates in LLMs